gRPC is a modern, open-source, high-performance RPC framework that can run in any environment. It can efficiently connect services in and across data centers with pluggable support for load balancing, tracing, health checking, and authentication. It is also applicable in the last mile of distributed computing to connect devices, mobile applications, and browsers to backend services.

In this document, we explain gRPC as best we can, based on what we have built and learned while using gRPC as our main technology.

The project is open source and anybody is free to use, study, modify and distribute it for any non-commercial purpose.

You can find it under my gRPC GitHub repository: https://github.com/flakrimjusufi/grpc-with-rest

We are going to split our Proof of Concept into three different chapters:

- Installing gRPC in our local environment

- Using gRPC with Go as language

- Conclusions

Installing gRPC in Our Local Environment

I’m running on Linux (Ubuntu 20.04) and all the installation instructions are for Linux, with no coverage for Windows or Mac. For more details, check gRPC’s official blog: gRPC - now with easy installation.

There are some prerequisites for installing gRPC in your environment:

- Where to use gRPC, i.e. choosing the language in which you want to use gRPC. There are lots of supported languages (see Supported languages).

- I’ve chosen Go, and I’ve used version 1.16.5.

- Protocol buffer compiler protoc [version 3] - For installation instructions, please refer to this link: Protocol Buffer Compiler Installation

- The following command was used to install protoc:

      apt install -y protobuf-compiler

- After that, the following command was used to ensure that protoc was successfully installed:

      protoc --version

- Your chosen language plugin for the protocol compiler. Since I’m using Go, I’ll stick with the Go plugins for the protocol compiler.

- To complete the installation, we need to use the following commands:

      go install google.golang.org/protobuf/cmd/protoc-gen-go@v1.26
      go install google.golang.org/grpc/cmd/protoc-gen-go-grpc@v1.1

- After that, we just need to update the PATH so that the protoc compiler can find the plugins:

      export PATH="$PATH:$(go env GOPATH)/bin"

Using gRPC With Go as Language

After completing the installation of all the prerequisites, I started developing. My goal was to investigate gRPC vs REST and to help the team decide which one is better for our new platform. I tried to touch different features of gRPC with JSON, with some key points in mind that we will discuss in the sections below:

- Integration of gRPC / ease of use

- Advantages / Disadvantages

- gRPC vs HTTP

- Integrating two or more gRPC services

- gRPC Worthiness

The initial idea was to create a project in which we are going to see all the benefits from gRPC, specifically to explore the possibilities with gRPC-gateway, which is a plugin of the Google protocol buffers compiler protoc.

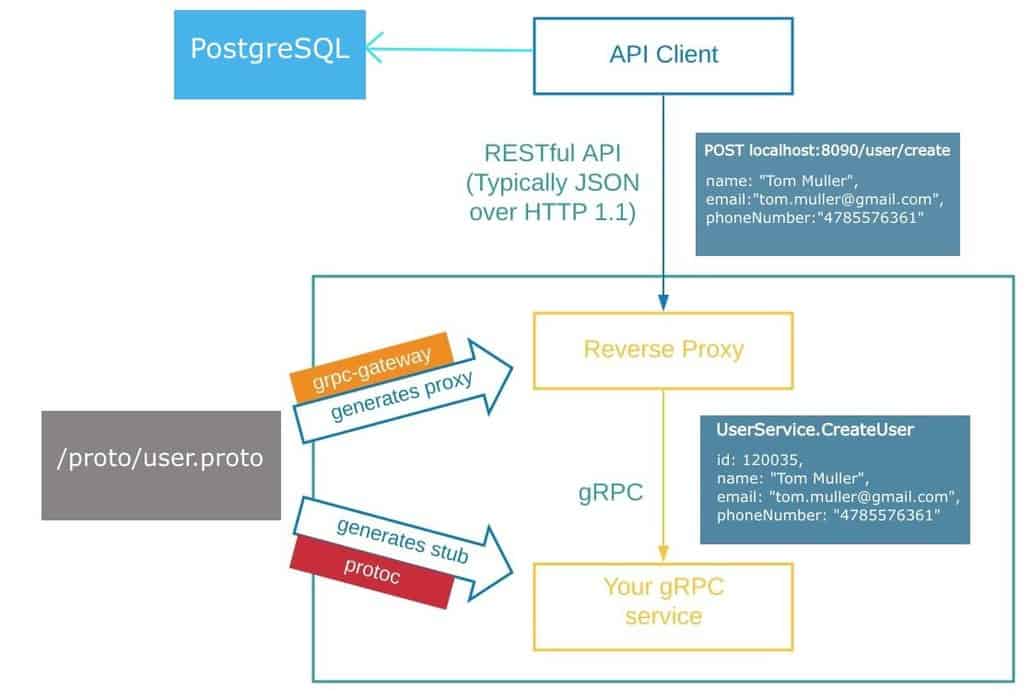

This plugin reads protobuf service definitions and generates a reverse-proxy server which translates a RESTful HTTP API into gRPC. The server is generated according to the google.api.http annotations in our service definitions under the proto file, something that we are going to cover later. With grpc-gateway, we wanted to have a system in which both gRPC and RESTful style are integrated.

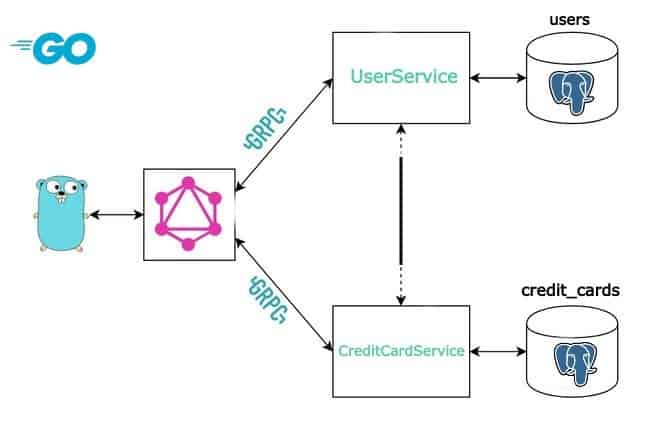

Basically, what we wanted to achieve is described in the picture below:

According to the architecture diagram, we have:

- A proto file in which we define the entire data structure

- Protoc to generate stubs (client interface)

- gRPC-gateway to generate proxy

- API client - RESTful API

- PostgreSQL database

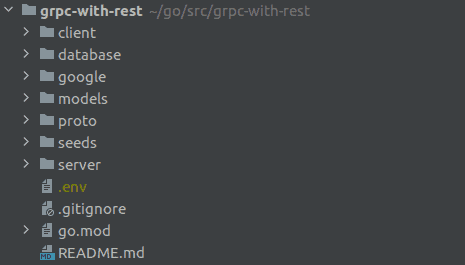

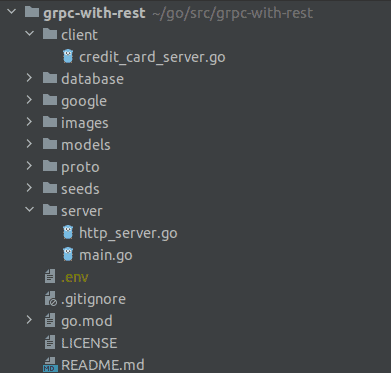

And this is what our project structure looks like:

We are going to start with the proto directory, which is the most important one and deserves more attention.

This is the place where we define our gRPC services and all data structures.

Following best practices, we decided to keep all the proto files in a single directory. For this purpose, we have created a proto directory under which the user.proto file is located. In the user.proto file we can find all the gRPC services and data structures that we are going to use for our project.

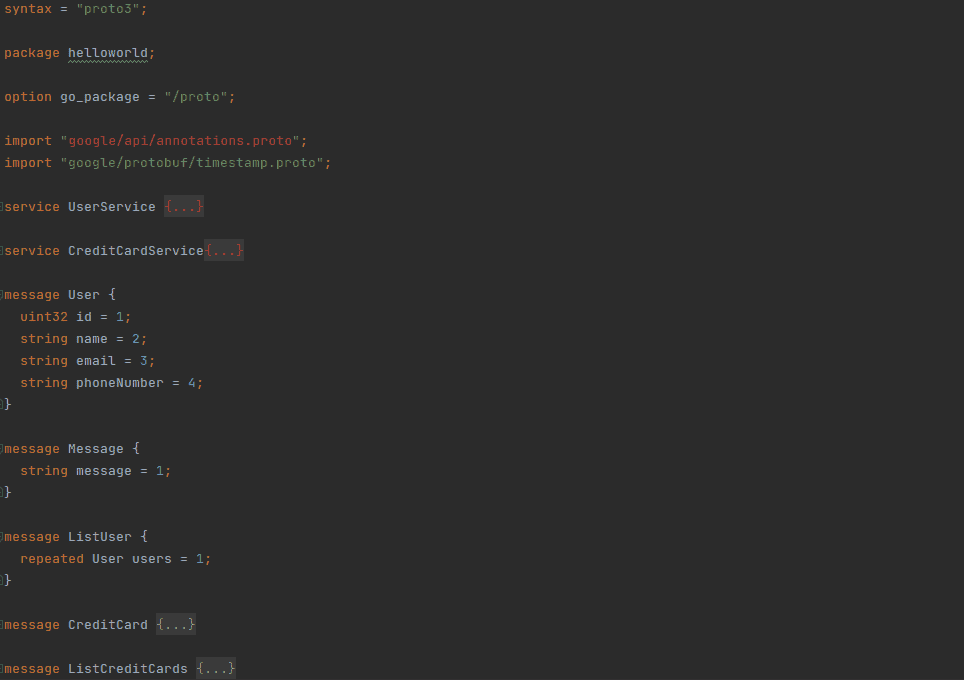

This is what the user.proto file in our project looks like:

We will go line by line explaining what each one does.

    syntax = "proto3";

In the first line, we define the syntax version of the protocol buffers language used in this file. In my case, it is “proto3”, the latest version of the language at the time of writing.

    package helloworld;

After the syntax, there’s the package that needs to be defined. This should be a unique identifier in order to prevent name clashes between message types.

    option go_package = "/proto";

Going further to the next line, it’s important to highlight that recent versions of the Go plugin (protoc-gen-go) made option go_package a required parameter, and without defining it your proto file will not be compiled to Go.

This is the parameter in which you define the Go import path of the package that will hold the generated code. In my case, it is “/proto”, which matches the directory in which my proto file is located.

If you don’t define this parameter, you will get an error like the one below once you try to compile your proto file:

    Please specify either:
        • a "go_package" option in the .proto source file, or
        • a "M" argument on the command line.

    See https://developers.google.com/protocol-buffers/docs/reference/go-generated#package for more information.

    --go_out: protoc-gen-go: Plugin failed with status code 1.

Moving to the next lines, we are importing two important modules for our services:

    import "google/api/annotations.proto";
    import "google/protobuf/timestamp.proto";

The first one is google/api/annotations.proto and the second is google/protobuf/timestamp.proto. We will explain both of them in more detail since I consider this part a pain point that took most of my time while working on this project.

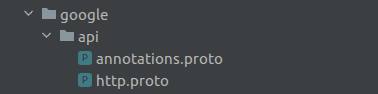

→ google/api/annotations.proto is a required third-party protobuf file that provides the HTTP annotations. If you don’t have this dependency inside your project, you can’t define your API calls (GET, POST, PUT, PATCH, DELETE) inside your services and you won’t be able to transcode HTTP/JSON to gRPC.

Interestingly enough, there’s currently no standard way to import this dependency. Even if you add the above lines inside your proto file, the protoc compiler won’t fetch this dependency for you; you have to do this manually.

To my surprise, this way of importing third-party dependencies is also encouraged by the grpc-gateway plugin.

After wasting a few days trying to find a proper solution, I felt tired and overwhelmed by this issue and decided to import the dependencies manually.

So, what I did was the following:

- I went to the googleapis GitHub repo in which the annotations.proto and http.proto files are defined [https://github.com/googleapis/googleapis/blob/master/google/api]

- I copied the files [annotations.proto and http.proto]

- I created a directory named google and I added another folder inside this directory named api so the path would match the required import in my proto file → google/api/annotations.proto

- I pasted the files inside the directory

After completing the above steps, the project structure in the defined directory looked like this:

Now I was able to add HTTP calls and to compile my user.proto file.

Moving to the next import:

    import "google/protobuf/timestamp.proto";

→ google/protobuf/timestamp.proto is one of the well-known types that ship with protocol buffers, and it is designed for timestamps. Google encourages the use of Timestamp for better handling across machines. A Timestamp represents a point in time independent of any time zone or local calendar, so it carries no concepts like “day” or “month”. It is related to Duration in that the difference between two Timestamp values is a Duration, and a Duration can be added to or subtracted from a Timestamp.

Moving to the next lines, we have defined two services in our user.proto file.

- UserService

- CreditCardService

Like many RPC systems, gRPC is based around the idea of defining a service, specifying the methods that can be called remotely with their parameters and return types. Although UserService and CreditCardService differ from each other and each one has its own logic and data structure, they follow the same pattern. We will take one example from each of them and explain in a bit more detail what they do.

The idea behind UserService was to create a server-client cycle in which we will use HTTP calls (Transcoding HTTP/JSON to gRPC) to interact with a PostgreSQL database that has a table named users. This table has 100k records that contain the user’s personal information. To interact with the database, we have used the GORM framework.

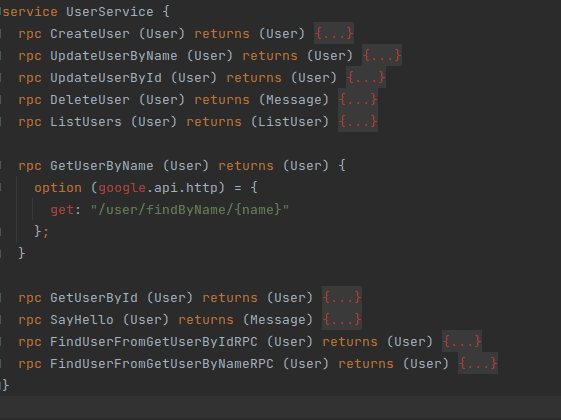

Let’s start explaining in more detail what UserService does. This is what the code looks like:

    service UserService {
        rpc CreateUser (User) returns (User) {
            option (google.api.http) = {
                post: "/user/create"
                body: "*"
            };
        }
    }

The keyword service defines a uniquely named service in your proto file, in my case it’s UserService.

Inside UserService, we have defined an rpc (reserved keyword for RPC) call CreateUser which takes (User) as input and returns (User) as output.

Now, what does this all mean? We will explain more in the section below.

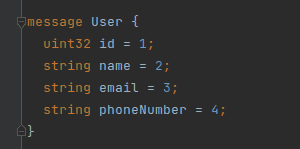

User is a data structure (called a message in protocol buffers) in which you can specify your field types, their names and their positions (field numbers) inside the struct. Let’s dive into the code explaining line by line what this means:

    message User {
        uint32 id = 1;
        string name = 2;
        string email = 3;
        string phoneNumber = 4;
    }

message is again a reserved keyword that you use for defining your data structure, in my case User.

Inside the User data structure, we have defined:

| Data type | Name | Position |
| --- | --- | --- |
| uint32 | id | 1 |
| string | name | 2 |
| string | email | 3 |
| string | phoneNumber | 4 |

This is the data structure we are using as input and also as output in our rpc call CreateUser which belongs to UserService.

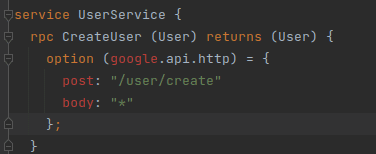

Going further in the next few lines inside our UserService:

    option (google.api.http) = {
        post: "/user/create"
        body: "*"
    };

This is the part where we are telling our service that this rpc call can also be reached over HTTP, with the request transcoded to gRPC. This option takes two parameters:

- post - which refers to the POST request; after the “:” you have to define the endpoint that you are going to use in order to hit this rpc call.

- body - which defines how the HTTP request body is mapped onto the input message. In my case it’s “*”, which means that every field of the input message that is not bound by the URL path is read from the request body (sent as JSON).

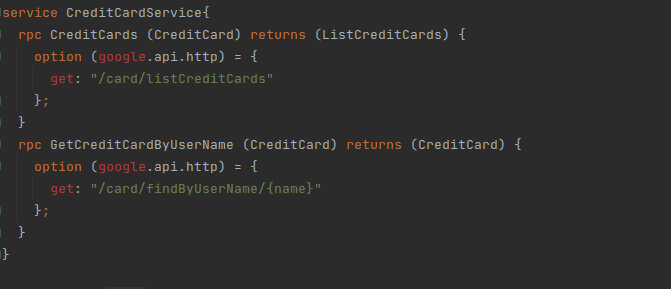

Now that we are done with the definition of UserService, we will briefly explain, for the sake of clarity, what CreditCardService does.

    service CreditCardService {
        rpc GetCreditCardByUserName (CreditCard) returns (CreditCard) {
            option (google.api.http) = {
                get: "/card/findByUserName/{name}"
            };
        }
    }

Inside CreditCardService we have defined an rpc call GetCreditCardByUserName which takes a CreditCard as input and returns a CreditCard as output.

CreditCard is again a data structure (message) which contains data types, names and positions.

This is what CreditCard looks like:

    message CreditCard {
        uint32 id = 1;
        string name = 2;
        string email = 3;
        string phoneNumber = 4;
        string address = 5;
        string country = 6;
        string city = 7;
        string zip = 8;
        string cvv = 9;
        google.protobuf.Timestamp created_at = 10;
        google.protobuf.Timestamp updated_at = 11;
        google.protobuf.Timestamp deleted_at = 12;
    }

In this data structure, we have defined more fields and we have added the Timestamp data type for the created_at, updated_at and deleted_at fields, something that we didn’t do in our previous example.

Continuing to the next few lines in our CreditCardService:

    option (google.api.http) = {
        get: "/card/findByUserName/{name}"
    };

Here, we are again telling our service that this rpc call can be reached over HTTP, and this time the option requires just one parameter:

- get - which refers to the GET request and will accept a {name} at the end of our defined endpoint, something like this: /card/findByUserName/Flakrim

Since this is a GET request, we don’t have to define a ‘body’ as we did in the above example when we created a POST request.
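
The way a {name} placeholder binds a single path segment can be sketched with the standard library alone. The nameFromPath helper below is hypothetical and written only for illustration; grpc-gateway generates its own path matching, so this is an approximation of the idea rather than its implementation.

```go
package main

import (
	"fmt"
	"net/url"
	"strings"
)

const cardByNamePrefix = "/card/findByUserName/"

// nameFromPath extracts the {name} segment from a request path.
// It reports false when the path does not match the template or when
// the remainder is empty or spans more than one path segment.
func nameFromPath(path string) (string, bool) {
	if !strings.HasPrefix(path, cardByNamePrefix) {
		return "", false
	}
	name := strings.TrimPrefix(path, cardByNamePrefix)
	if name == "" || strings.Contains(name, "/") {
		return "", false
	}
	return name, true
}

func main() {
	u, err := url.Parse("http://localhost:8090/card/findByUserName/Tom%20Muller")
	if err != nil {
		panic(err)
	}
	// u.Path is already percent-decoded, so the space in the name is restored.
	name, ok := nameFromPath(u.Path)
	fmt.Println(name, ok)
}
```

The extracted value is what ends up in the name field of the CreditCard input message.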

After we are done with the definition of services and we have covered almost everything in our proto file, it’s time for the most interesting part in the gRPC ecosystem - the compiling.

To compile our proto file we are going to use this command:

    protoc --go_out=. --go_opt=paths=source_relative \
        --go-grpc_out=. --go-grpc_opt=paths=source_relative \
        proto/user.proto

It took me a while to understand how gRPC works and what goes on behind this command, but I’ll try to explain it briefly:

- --go_out=. --go_opt=paths=source_relative: tells protoc to run the Go plugin (protoc-gen-go) and to write the generated message code under the current directory; paths=source_relative places each output file in the same relative directory as its .proto file

- --go-grpc_out=. --go-grpc_opt=paths=source_relative: does the same for the gRPC plugin (protoc-gen-go-grpc), which generates the client and server code for our services

- proto/user.proto: this is the proto file that we want to compile

This command will build us all the functions, interfaces, and everything that we need to have a client-server response cycle. It will automatically generate the source code from the description and from the logic that we have defined in our proto file. The generated code takes care of serializing our structured data to a stream of bytes and parsing it back.

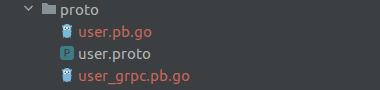

After executing this command, I see that two additional files were added to my proto directory:

- user.pb.go: This is the file which contains the Go types generated for our messages, together with the code that serializes them to and deserializes them from a stream of bytes.

- user_grpc.pb.go: This is the file which contains the client and server interfaces and the registration functions generated for our services.

After we have successfully completed this step, it’s time to generate our grpc-gateway reverse proxy server, which we are using to transcode HTTP to gRPC. This can be achieved with this command:

    protoc -I . --grpc-gateway_out=logtostderr=true:. proto/user.proto

This command tells the compiler where to find the proto file in which we have defined our endpoints and also where to store the generated code.

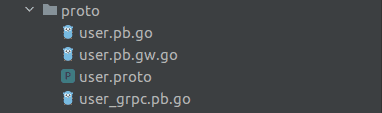

After successfully executing this command, I’ve completed the protocol buffer part and this is what my proto directory looks like:

I can see that the user.pb.gw.go file, which contains the generated grpc-gateway code, was added to my proto directory and now we are ready to use our services.

Moving on to the next part, we will try to explain briefly how the server works and how the services are being used on the server-side.

We will start with the main function and then we will move on to the most exciting part: hitting the reverse proxy server and transcoding HTTP to gRPC services.

This is the main block that we are using in our project:

    func main() {
        // Create a listener on TCP port
        lis, err := net.Listen("tcp", ":8080")
        if err != nil {
            log.Fatalln("Failed to listen:", err)
        }

        // Create a gRPC server object
        s := grpc.NewServer()

        // Attach the User service to the server
        userpb.RegisterUserServiceServer(s, &userServer{})
        userpb.RegisterCreditCardServiceServer(s, &creditCardServer{})

        // Serve gRPC server
        log.Println("Serving gRPC on 0.0.0.0:8080")
        go func() {
            log.Fatalln(s.Serve(lis))
        }()

        maxMsgSize := 1024 * 1024 * 20

        // Create a client connection to the gRPC server we just started
        // This is where the gRPC-Gateway proxies the requests
        conn, err := grpc.DialContext(
            context.Background(),
            "0.0.0.0:8080",
            grpc.WithBlock(),
            grpc.WithInsecure(),
            grpc.WithDefaultCallOptions(grpc.MaxCallRecvMsgSize(maxMsgSize), grpc.MaxCallSendMsgSize(maxMsgSize)),
        )
        if err != nil {
            log.Fatalln("Failed to dial server:", err)
        }

        gwmux := runtime.NewServeMux()

        // Register User Service
        err = userpb.RegisterUserServiceHandler(context.Background(), gwmux, conn)
        if err != nil {
            log.Fatalln("Failed to register gateway:", err)
        }

        newServer := userpb.RegisterCreditCardServiceHandler(context.Background(), gwmux, conn)
        if newServer != nil {
            log.Fatalln("Failed to register gateway:", newServer)
        }

        gwServer := &http.Server{
            Addr:    fmt.Sprintf(":%s", os.Getenv("server_port")),
            Handler: gwmux,
        }

        log.Println(fmt.Sprintf("Serving gRPC-Gateway on %s:%s", os.Getenv("server_host"), os.Getenv("server_port")))
        log.Fatalln(gwServer.ListenAndServe())
    }

Since this is the main block under which all the services are being implemented and the reverse proxy server is being handled, I consider that this block deserves more attention. That’s why we will dive deeper into the code trying to explain each part accordingly.

We are going to start with the listener:

    // Create a listener on TCP port
    lis, err := net.Listen("tcp", ":8080")
    if err != nil {
        log.Fatalln("Failed to listen:", err)
    }

Here we are defining a TCP listener on port :8080, on which the gRPC server will be served.

Moving to the next block:

    // Create a gRPC server object
    s := grpc.NewServer()

    // Attach the User service to the server
    userpb.RegisterUserServiceServer(s, &userServer{})
    userpb.RegisterCreditCardServiceServer(s, &creditCardServer{})

Here we are creating a gRPC server object and we are attaching to the server the UserService and CreditCardService which we defined in our proto/user.proto file.

Moving to the next block:

    // Serve gRPC server
    log.Println("Serving gRPC on 0.0.0.0:8080")
    go func() {
        log.Fatalln(s.Serve(lis))
    }()

In this block, we start serving the gRPC server on the listener that we have defined, in our case on 0.0.0.0:8080, that is, port 8080 on all local interfaces. The call runs in a goroutine so that the main function can continue and start the gateway.

Moving further to the next block:

    maxMsgSize := 1024 * 1024 * 20

    // Create a client connection to the gRPC server we just started
    // This is where the gRPC-Gateway proxies the requests
    conn, err := grpc.DialContext(
        context.Background(),
        "0.0.0.0:8080",
        grpc.WithBlock(),
        grpc.WithInsecure(),
        grpc.WithDefaultCallOptions(grpc.MaxCallRecvMsgSize(maxMsgSize), grpc.MaxCallSendMsgSize(maxMsgSize)),
    )
    if err != nil {
        log.Fatalln("Failed to dial server:", err)
    }

This is the part where we are creating a client connection to the gRPC server we just started in the lines above. This is where the gRPC-Gateway proxies the request.

Note that we are targeting the “0.0.0.0:8080” address on which the gRPC server is being served.

grpc.WithBlock() makes the dial call wait until the connection is established, and grpc.WithInsecure() disables transport security. We are using them because we are running this project locally and we don’t have to deal with security or similar concerns. Note that newer grpc-go releases deprecate grpc.WithInsecure() in favor of grpc.WithTransportCredentials(insecure.NewCredentials()).

grpc.WithDefaultCallOptions(...) is being used just to raise the maximum size of the messages (to 20 MB) that can be received and sent when we are transcoding HTTP to gRPC.
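
The arithmetic behind maxMsgSize can be sketched as follows. The 4 MiB default receive limit is an assumption based on my understanding of grpc-go and should be checked against the version you use; the payload sizes are illustrative values chosen for this sketch, and the fits helper is hypothetical.

```go
package main

import "fmt"

const (
	mib = 1024 * 1024
	// Assumption: 4 MiB is grpc-go's default limit for received messages.
	defaultRecvLimit = 4 * mib
	// The limit configured in main() through maxMsgSize.
	raisedLimit = 20 * mib
)

// fits reports whether a payload of sizeBytes stays within limitBytes.
func fits(sizeBytes, limitBytes int) bool {
	return sizeBytes <= limitBytes
}

func main() {
	// Illustrative payload sizes, not measurements from the project.
	for _, size := range []int{1 * mib, 5 * mib, 10 * mib} {
		fmt.Printf("%2d MiB: default=%v raised=%v\n",
			size/mib, fits(size, defaultRecvLimit), fits(size, raisedLimit))
	}
}
```

Under this assumption, a response of a few megabytes would be rejected with the default settings, which is why the limit is raised before returning large result sets.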

Moving on to the next block:

    gwmux := runtime.NewServeMux()

    // Register User Service
    err = userpb.RegisterUserServiceHandler(context.Background(), gwmux, conn)
    if err != nil {
        log.Fatalln("Failed to register gateway:", err)
    }

    newServer := userpb.RegisterCreditCardServiceHandler(context.Background(), gwmux, conn)
    if newServer != nil {
        log.Fatalln("Failed to register gateway:", newServer)
    }

Here, we are telling the server that we are dealing with multiple services and this is the place where we are registering our service handlers.

Notice that both userpb.RegisterUserServiceHandler(...) and userpb.RegisterCreditCardServiceHandler(...) are defined in user.pb.gw.go, which is the file that was auto-generated once we executed the grpc-gateway compile command.

To these functions we are passing three parameters:

- context.Background() → which is a non-nil empty Context, it is never canceled, has no values and has no deadline. It is typically used by the main function, initialization and tests and also as the top-level Context for incoming requests.

- gwmux → the request multiplexer created by runtime.NewServeMux(). runtime is a package of grpc-gateway (part of grpc-ecosystem), and NewServeMux() returns a new ServeMux whose internal mapping is empty

- conn → connection which will be used by gRPC-Gateway to proxy the requests.

And the last part:

    gwServer := &http.Server{
        Addr:    fmt.Sprintf(":%s", os.Getenv("server_port")),
        Handler: gwmux,
    }

    log.Println(fmt.Sprintf("Serving gRPC-Gateway on %s:%s", os.Getenv("server_host"), os.Getenv("server_port")))
    log.Fatalln(gwServer.ListenAndServe())

Here, we are just starting the gateway server using &http.Server, which contains the address on which the server will listen and the handler, which is the gwmux defined above.

That’s all from the main block. Now we will move on to explaining in more detail one very important piece of gRPC and gRPC-gateway: The functions.

One thing worth highlighting is the naming convention.

The naming of the functions defined in the server should match the naming of rpc calls defined in the proto file. That’s how gRPC makes the connection and interacts with the services.

We will take the example of CreateUser, which is an rpc call that belongs to UserService. Here is the server-side code that implements it:

    func (as *userServer) CreateUser(ctx context.Context, in *userpb.User) (*userpb.User, error) {
        user := models.User{Name: in.Name, Email: in.Email, PhoneNumber: in.PhoneNumber}
        database.NewRecord(user)
        database.Create(&user)
        return &userpb.User{Id: uint32(user.ID), Name: user.Name, Email: user.Email, PhoneNumber: user.PhoneNumber}, nil
    }

The first thing that needs to be mentioned is the naming convention. Notice that the name (CreateUser) of this function on the server-side is the one that matches the RPC call which is defined inside UserService in the proto file.

More precisely, we are talking about this part of code in our user.proto file:

    service UserService {
        rpc CreateUser (User) returns (User) {
            option (google.api.http) = {
                post: "/user/create"
                body: "*"
            };
        }
    }

If you decide to use another name (for example UserCreate) that is not the same as the one defined in the proto file, you will get a 501 Not Implemented response when you hit the endpoint.

gRPC is also case sensitive and will return the same response if you name the function Createuser instead of CreateUser.

    {
        "code": 12,
        "message": "method CreateUser not implemented",
        "details": []
    }

Now we will move forward and explain the parameters of the CreateUser function.

func (as *userServer) CreateUser(ctx context.Context, in *userpb.User) (*userpb.User, error)

Inside this function, we are using these parameters:

- (as *userServer) [receiver]: as is a pointer to userServer, which is the type that implements the UserService server. More precisely, as points to:

      type userServer struct {
          userpb.UnimplementedUserServiceServer
      }

- userpb is the import alias of the Go package that was generated from our proto file

- UnimplementedUserServiceServer is a generated struct that is embedded in order to have forward compatible implementations. It is located in user_grpc.pb.go, the file that was generated after we compiled the proto file.

- ctx context.Context [input parameter]: ctx is a context.Context, a standard library interface that carries deadlines, cancellation signals and request-scoped values. A Context is safe to be used by multiple goroutines simultaneously.

- in *userpb.User [input parameter]: in is pointing to userpb.User, which is the struct located in user.pb.go, the file that was generated when we compiled the proto file.

- *userpb.User [return value]: same type as above, but here we are telling the function to return a *userpb.User struct as a response

- error [return value]: just a built-in interface for representing an error condition, with the nil value representing no error

Moving to the next line:

    user := models.User{Name: in.Name, Email: in.Email, PhoneNumber: in.PhoneNumber}

Here, we are passing along the values we are getting from the HTTP POST call.

- models.User{} is a struct that contains Name, Email and PhoneNumber and is used to interact with the database

- in.Name, in.Email and in.PhoneNumber are the values that are deserialized from gRPC (in is the variable that points to userpb.User in user.pb.go)

Continuing to the next two lines:

    database.NewRecord(user)
    database.Create(&user)

Here, we are interacting with the database using the GORM framework and we are telling PostgreSQL to create a new record in the users table.

database is a global variable that we are using to connect to the database. More precisely, database is pointing to:

    var database = db.Connect()

Moving towards the end of this block of code:

    return &userpb.User{Id: uint32(user.ID), Name: user.Name, Email: user.Email, PhoneNumber: user.PhoneNumber}, nil

This is the place where we are telling our function to return a &userpb.User instance, which we fill with the user information that we received from the database.

Since we have covered lots of code already and now we are assuming that we have some knowledge of how things are working, we are going to run the example we just explained.

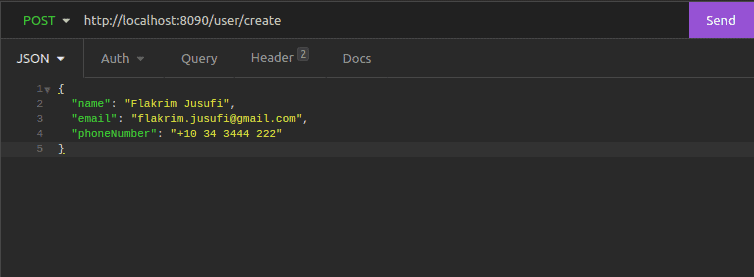

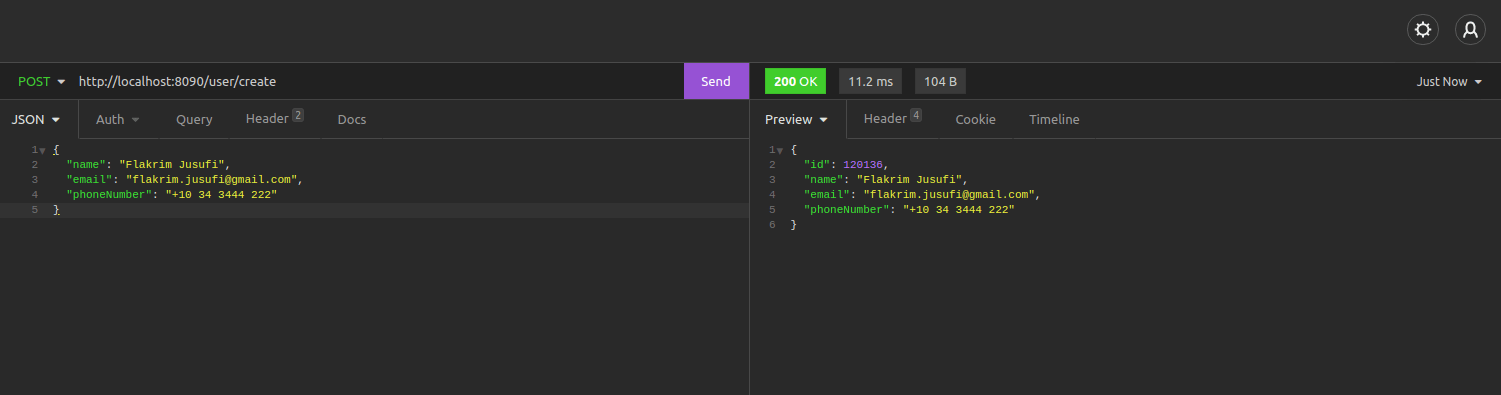

What we are going to do is basically start the gRPC and gRPC-gateway proxy server and send a POST request to it by hitting the /user/create endpoint.

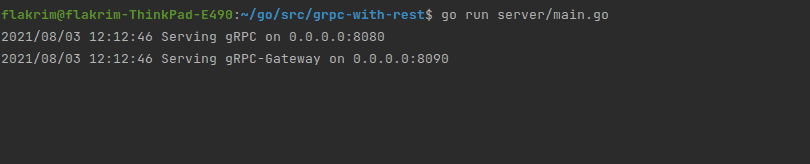

Starting the server with the go run server/main.go command and getting the response:

    2021/07/30 14:31:15 Serving gRPC on 0.0.0.0:8080
    2021/07/30 14:31:15 Serving gRPC-Gateway on 0.0.0.0:8090

I’m using Insomnia for sending requests to the server:

We are sending three parameters to the server:

- name (string)

- email (string)

- phoneNumber (string)

Remember this is the endpoint we have defined in our user.proto file:

And this is the message (data structure in user.proto file) which we are using for this request:

After sending a POST request to http://localhost:8090/user/create, this is what the response from the server looks like:

We’ve got a 200 OK status code from the server and here we have the gRPC JSON style response:

    {
        "id": 120136,
        "name": "Flakrim Jusufi",
        "email": "flakrim.jusufi@gmail.com",
        "phoneNumber": "+10 34 3444 222"
    }

Checking the terminal in the server, I’m reading the logs from the PostgreSQL database:

    [2021-07-30 16:11:59] [3.98ms] INSERT INTO "users" ("created_at","updated_at","deleted_at","name","email","phone_number") VALUES ('2021-07-30 16:11:59','2021-07-30 16:11:59',NULL,'Flakrim Jusufi','flakrim.jusufi@gmail.com','+10 34 3444 222') RETURNING "users"."id"
    [1 rows affected or returned ]

And yep. We did it!

We have successfully created a user in our database with HTTP REST-ful style using gRPC, protobuf and gRPC-gateway.

gRPC vs REST

We will switch focus now and talk more about the performance of gRPC. I was able to compare REST HTTP APIs with gRPC and, according to my findings, I can see why gRPC is often chosen over REST by software developers. It is pretty fast and its payloads are far more compact than those of traditional REST APIs.

We prepared a data set of 100K records in PostgreSQL database and we investigated how gRPC and REST are responding when they have to deal with this amount of data. The data set was the same for both gRPC and REST.

The performance of gRPC:

| Number of records | Size of data | Response time |
| --- | --- | --- |
| 10k | 963.9 KB | 120 ms |
| 20k | 1916.5 KB | 270 ms |
| 30k | 3 MB | 270 ms |
| 40k | 3.9 MB | 500 ms |
| 50k | 4.7 MB | 700 ms |
| 60k | 5.7 MB | 750 ms |
| 70k | 6.9 MB | 850 ms |
| 80k | 7.9 MB | 950 ms |
| 90k | 8.8 MB | 1 s |
| 100k | 9.8 MB | 1.10 s |

The performance of REST:

| Number of records | Size of data | Response time |
| --- | --- | --- |
| 10k | 2034.3 KB | 150 ms |
| 20k | 4 MB | 340 ms |
| 30k | 6 MB | 500 ms |
| 40k | 8 MB | 600 ms |
| 50k | 10 MB | 750 ms |
| 60k | 12 MB | 850 ms |
| 70k | 14 MB | 1 s |
| 80k | 16 MB | 1.30 s |
| 90k | 18 MB | 1.50 s |
| 100k | 20 MB | 1.70 s |

As we can see, gRPC performs somewhat better when it comes to speed and considerably better when it comes to the size of the data. When comparing gRPC vs REST, we can conclude that:

- gRPC is around 20-25% faster than REST

- gRPC is around 40-50% more efficient (smaller data size) compared to REST
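
For readers who want to check such percentages against the two tables, the relative difference can be computed as (REST - gRPC) / REST. The sketch below applies this to the 10k and 100k rows; the percentLess helper is hypothetical, and the formula is my assumption about how the comparison can be derived rather than a description of how the figures above were obtained.

```go
package main

import "fmt"

// percentLess returns by how many percent value is smaller than baseline.
func percentLess(baseline, value float64) float64 {
	return (baseline - value) / baseline * 100
}

func main() {
	// Figures copied from the 10k and 100k rows of the two tables above
	// (REST as baseline, gRPC as value).
	fmt.Printf("10k  response time: %.1f%%\n", percentLess(150, 120))
	fmt.Printf("10k  data size:     %.1f%%\n", percentLess(2034.3, 963.9))
	fmt.Printf("100k response time: %.1f%%\n", percentLess(1.70, 1.10))
	fmt.Printf("100k data size:     %.1f%%\n", percentLess(20, 9.8))
}
```

Individual rows vary, so the ranges quoted above are best read as rough averages over the whole data set.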

Let’s finish our comparison with a high-level overview of gRPC and REST.

| Topic | gRPC | REST |
| --- | --- | --- |
| Performance | Around 20-25% faster | Around 20-25% slower |
| Efficiency | Around 40-50% more efficient | Around 40-50% less efficient |
| Protocol | HTTP/2 (fast) | HTTP/1.1 (slow) |
| Payload | Protobuf (binary, small) | JSON (text, large) |
| API architecture | Strict, required (.proto file) | Loose, optional (OpenAPI) |
| Code generation | Supported with built-in (protoc) | Third-party tools |
| Security | TLS/SSL | TLS/SSL |
| Streaming | Client, server, bi-directional | Client, server |
| Client code-generation | Supported (built-in) | OpenAPI + third party tooling |
| Browser support | Not supported (requires grpc-web) | Widely Supported |

Integrating the Two gRPC Services

Moving on to the next topic, we were curious to see how gRPC services interact with each other when they are integrated into a client-server response cycle. We wanted to see how they are performing in circumstances where they need to interact with a database and we were interested to know the entire process.

The architecture:

We have a client-server system in which the client-side interacts with the server-side and the response cycle goes back and forth creating a Remote Procedure Call.

As we can see in the architecture diagram:

- Server contains all the gRPC services and proto generated files.

- Client server contains two different services that will interact with each other.

- UserService is a gRPC service that interacts with users table in our PostgreSQL database.

- CreditCardService is another gRPC service that interacts with the credit_cards table in our PostgreSQL database.

We will first go to our user.proto file to see what UserService looks like, more precisely, the rpc call GetUserByName which we are going to use when interacting with CreditCardService.

The rpc call GetUserByName accepts a User message as input and returns a User message as output. All of this is achieved while transcoding HTTP to gRPC through the API endpoint GET [/user/findByName/{name}].

Moving on to the next service, CreditCardService:

In here we have two rpc calls:

- CreditCards - which returns a list of CreditCards that belong to registered users

- GetCreditCardByUserName - which returns Credit Cards information by user’s name

And for the integration between services, we are going to use GetCreditCardByUserName rpc call.

Now let’s go and see what our client and server directories inside the project look like:

Under the client directory we have credit_card_server.go file which contains all the logic on the client-side, meanwhile in the server directory we have main.go file which contains the server logic and all the gRPC services we are going to interact with.

Now we are going to explain some important parts of the code in credit_card_server.go.

Full code in the main block:

    func main() {
        // Set up a connection to the server.
        conn, err := grpc.Dial(address, grpc.WithInsecure(), grpc.WithBlock())
        if err != nil {
            log.Fatalf("did not connect: %v", err)
        }
        defer conn.Close()

        c := userpb.NewCreditCardServiceClient(conn)
        u := userpb.NewUserServiceClient(conn)

        //user client
        ctx, cancel := context.WithTimeout(context.Background(), time.Second)
        defer cancel()

        personName := "Tom Muller"
        x, err := u.GetUserByName(ctx, &userpb.User{
            Name: personName,
        })
        if err != nil {
            log.Fatalf("could not get person data: %v", err)
        }

        //credit card client
        ctx2, cancel := context.WithTimeout(context.Background(), time.Second)
        defer cancel()

        r, err := c.GetCreditCardByUserName(ctx2, &userpb.CreditCard{
            Name: x.GetName(),
        })
        if err != nil {
            log.Fatalf("could not get person data: %v", err)
        }

        fmt.Println(colorCyan, r)
    }

Starting with the setup of the connection to the gRPC server and defining the services that we want to interact with:

- userpb.NewCreditCardServiceClient(conn) → refers to the client side of CreditCardService

- userpb.NewUserServiceClient(conn) → refers to the client side of UserService

    // Set up a connection to the server.
    conn, err := grpc.Dial(address, grpc.WithInsecure(), grpc.WithBlock())
    if err != nil {
        log.Fatalf("did not connect: %v", err)
    }
    defer conn.Close()
    c := userpb.NewCreditCardServiceClient(conn)
    u := userpb.NewUserServiceClient(conn)

Moving to the next few lines where we are making use of user client:

    //user client
    ctx, cancel := context.WithTimeout(context.Background(), time.Second)
    defer cancel()
    personName := "Tom Muller"
    x, err := u.GetUserByName(ctx, &userpb.User{
        Name: personName,
    })
    if err != nil {
        log.Fatalf("could not get person data: %v", err)
    }

Here, after we set up the context with a one-second timeout, we define personName := "Tom Muller", which is the name of the person whose information we want to retrieve.

    x, err := u.GetUserByName(ctx, &userpb.User{
        Name: personName,
    })

- u → points to userpb.NewUserServiceClient(conn)

- GetUserByName → the remote procedure call, part of UserService, that we use to look up the user by name.

- &userpb.User { Name : personName } → passing the personName to UserService

And the last part, the credit card client:

    //credit card client
    ctx2, cancel := context.WithTimeout(context.Background(), time.Second)
    defer cancel()
    r, err := c.GetCreditCardByUserName(ctx2, &userpb.CreditCard{
        Name: x.GetName(),
    })
    if err != nil {
        log.Fatalf("could not get person data: %v", err)
    }
    fmt.Println(colorCyan, r)

In this block of code, after we set up the context, we call the GetCreditCardByUserName rpc, which belongs to CreditCardService, and pass it x.GetName():

- x → is the User message returned by UserService in the call above (rpc call GetUserByName)

- x.GetName() → is the name field of that response, which becomes the input of GetCreditCardByUserName
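The chaining pattern described above (the output of one call becomes the input of the next, each call with its own deadline) can be sketched without a running gRPC server. Everything in the following example is a hypothetical stand-in: the User and CreditCard structs are simplified versions of the generated messages, the two interfaces mirror only the two calls used here (the real generated methods also accept call options), and fakeBackend replaces the network with in-memory maps.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// User and CreditCard are simplified stand-ins for the generated messages (assumption).
type User struct{ Name string }
type CreditCard struct{ Name, Country string }

// UserClient and CreditCardClient mirror only the two calls used in the walkthrough.
type UserClient interface {
	GetUserByName(ctx context.Context, in *User) (*User, error)
}
type CreditCardClient interface {
	GetCreditCardByUserName(ctx context.Context, in *CreditCard) (*CreditCard, error)
}

// fakeBackend is a hypothetical in-memory implementation of both clients.
type fakeBackend struct {
	users map[string]User
	cards map[string]CreditCard
}

func newFakeBackend() fakeBackend {
	return fakeBackend{
		users: map[string]User{"Tom Muller": {Name: "Tom Muller"}},
		cards: map[string]CreditCard{"Tom Muller": {Name: "Tom Muller", Country: "Malaysia"}},
	}
}

func (f fakeBackend) GetUserByName(ctx context.Context, in *User) (*User, error) {
	u, ok := f.users[in.Name]
	if !ok {
		return nil, errors.New("user not found")
	}
	return &u, nil
}

func (f fakeBackend) GetCreditCardByUserName(ctx context.Context, in *CreditCard) (*CreditCard, error) {
	c, ok := f.cards[in.Name]
	if !ok {
		return nil, errors.New("credit card not found")
	}
	return &c, nil
}

// cardForUser chains the two calls: the user returned by the first call
// supplies the name used as input for the second call.
func cardForUser(u UserClient, c CreditCardClient, name string) (*CreditCard, error) {
	ctx, cancelUser := context.WithTimeout(context.Background(), time.Second)
	defer cancelUser()
	user, err := u.GetUserByName(ctx, &User{Name: name})
	if err != nil {
		return nil, fmt.Errorf("get user: %w", err)
	}

	ctx2, cancelCard := context.WithTimeout(context.Background(), time.Second)
	defer cancelCard()
	card, err := c.GetCreditCardByUserName(ctx2, &CreditCard{Name: user.Name})
	if err != nil {
		return nil, fmt.Errorf("get credit card: %w", err)
	}
	return card, nil
}

func main() {
	b := newFakeBackend()
	card, err := cardForUser(b, b, "Tom Muller")
	fmt.Println(card, err)
}
```

Returning errors instead of calling log.Fatalf, and giving each cancel function its own name, are choices made for this sketch so that the function can be reused and tested; they are not how the project's client is written.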

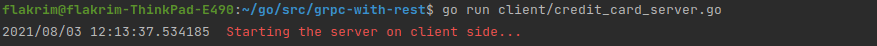

Now we are going to run the servers in two different terminals and we will see the responses.

Starting up the server-side first:

Starting the client-server:

Now from the client side, we are going to send the person's name to UserService and we will communicate with the rpc call GetUserByName.

2021/08/03 12:13:37.534210 Sending person name [Tom Muller] to [UserService] | communicating with rpc call [GetUserByName] |

Switching the terminal to the server side and checking the response:

2021/08/03 12:13:37.535027 [userService] - [rpc GetUserByName] -> Received person's name: Tom Muller

Now checking the terminal in client side:

2021/08/03 12:13:37.584432 Successfully received person data from Server Side [UserService] | rpc call [GetUserByName] |

___________________________________________________________________________

2021/08/03 12:13:37.584450 Response from the server side [userService] - [rpc GetUserByName]:

___________________________________________________________________________

id:120035 name:"Tom Muller" email:"tom.muller@gmail.com" phoneNumber:"4785576361"

Now we will again interact with the server by sending the received data to CreditCardService in order to interact with the rpc call GetCreditCardByUserName.

The output in client-side:

2021/08/03 12:13:37.584499 Now sending the retrieved data to [CreditCardService] in order interact with rpc call [GetCreditCardByUserName] and to receive person's credit card information

The response in server side:

2021/08/03 12:13:37.587228 [creditCardService] - [rpc GetCreditCardByUserName] -> Now sending the response (credit card of selected user) to client side...

And here we have the response in client-side:

2021/08/03 12:13:37.587402 Successfully received Credit Card information of selected user from [CreditCardService] by interacting with rpc call [GetUserByName] which belongs to [userService]

____________________________________________________________________________

2021/08/03 12:13:37.587411 Response from the server side [creditCardService] - [rpc GetCreditCardByUserName] :

____________________________________________________________________________

id:99 name:"Tom Muller" email:"ronaldoraynor@bergstrom.org" phoneNumber:"4785576361" address:"37026 Cape borough, West Jones, Utah 17459" country:"Malaysia" city:"Fredmouth" zip:"86850" cvv:"301" created_at:{seconds:1625574830 nanos:191972000}

In this scenario, we have made the output of UserService the input of CreditCardService, and we have successfully completed the integration of two different gRPC services.

Note that with a few lines of code we were able to integrate two gRPC services that are doing completely different tasks. The first one is interacting with the users table in the database and the other one is interacting with the credit_cards table.

And check the response times in the log timestamps above: the first call completed in about 50 milliseconds and the second in about 3 milliseconds!

Conclusions

Since we have covered a bit of everything in our experience with gRPC using Go as a language, we have come down to the last part in which we will draw our conclusions. It’s been a long journey and in this document, we have tried to explain only the most important topics.

Coming down to the conclusions, I want to emphasize that they are not intended to shape or change anyone’s opinion of gRPC. My conclusions are based solely on my experience and the things that I’ve touched while working on this project. Everyone can have different experiences and can form their own opinion about this technology.

We will start first with gRPC strengths and the things that have impressed me the most.

Advantages of gRPC

Architecture

I’m a fan of strongly typed languages and I love when things are designed in a strict way following a strict specification of rules. I was impressed by how simple and straightforward the architecture of gRPC was. You can create a robust and lightweight client-server application in just a matter of seconds.

You just have to define your services and the data structure (messages) in the proto file. Everything else is auto-generated when you compile your proto file. No need for further changes or any other additions. Code generation eliminates duplication of messages on the client and server and creates a strongly typed client for you.

Not having to write a client saves significant development time in applications with many services. gRPC is also consistent across platforms and implementations. You can simply build it once in whatever language you want and use it in any other language that gRPC supports. You can build a service in Go, for example, and use it in your Java program.

Performance

One of the things that impressed me the most was the performance of gRPC. I was able to retrieve the entire database (more than 100k records) in a little over one second, and I was surprised by how small the payload was: just 9.8 MB.

This payload contained each user's personal information, such as ID, Name, Email, and PhoneNumber, and all the users had realistic data, not dummy records filled with test strings.

This matters a lot when you are dealing with large volumes of data and more heavily populated data structures. I was also able to fetch a user from the database by ID with a response time of just 1.3 milliseconds. That’s impressive.

The small message payloads produced by protobuf serialization can help in limited-bandwidth scenarios such as mobile apps. Since gRPC uses HTTP/2, you can also send multiple requests over one connection without worrying about whether the requests will block each other.
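To give a feel for why protobuf payloads are small, the following sketch hand-encodes one user record in the protobuf wire format (a one-byte tag per field instead of a quoted field name, and a variable-length integer for the id) and compares its size with the JSON encoding of the same record. This is a simplified illustration that uses only the standard library, not the real protobuf library; the field numbers 1 to 4 and the helper names are assumptions, and unlike a real proto3 encoder it does not skip empty fields.

```go
package main

import (
	"encoding/binary"
	"encoding/json"
	"fmt"
)

// User mirrors the fields shown in the UserService response (field layout assumed).
type User struct {
	ID          int64  `json:"id"`
	Name        string `json:"name"`
	Email       string `json:"email"`
	PhoneNumber string `json:"phoneNumber"`
}

// Protobuf wire types used by this sketch.
const (
	wireVarint = 0 // integers
	wireBytes  = 2 // length-delimited values such as strings
)

// appendUvarint appends v using base-128 varint encoding,
// the same integer encoding that protocol buffers use.
func appendUvarint(b []byte, v uint64) []byte {
	var tmp [binary.MaxVarintLen64]byte
	n := binary.PutUvarint(tmp[:], v)
	return append(b, tmp[:n]...)
}

// encodeUser writes each field as a tag (field number << 3 | wire type)
// followed by the value. Field numbers 1..4 are assumptions.
func encodeUser(u User) []byte {
	var b []byte
	b = appendUvarint(b, 1<<3|wireVarint)
	b = appendUvarint(b, uint64(u.ID))
	for i, s := range []string{u.Name, u.Email, u.PhoneNumber} {
		b = appendUvarint(b, uint64(i+2)<<3|wireBytes)
		b = appendUvarint(b, uint64(len(s)))
		b = append(b, s...)
	}
	return b
}

// sizes returns the encoded size of u in the sketched wire format and in JSON.
func sizes(u User) (wire, jsonSize int) {
	j, err := json.Marshal(u)
	if err != nil {
		panic(err)
	}
	return len(encodeUser(u)), len(j)
}

func main() {
	u := User{ID: 120035, Name: "Tom Muller", Email: "tom.muller@gmail.com", PhoneNumber: "4785576361"}
	wire, jsonSize := sizes(u)
	fmt.Printf("wire format: %d bytes, JSON: %d bytes\n", wire, jsonSize)
}
```

The saving per record comes mostly from not repeating field names and from compact integers, which adds up quickly over 100k records.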

Streaming

Streaming is one of the strongest points of gRPC. I was fascinated by the way streaming was implemented in gRPC. HTTP/2 provides a foundation for long-lived, real-time communication streams, and gRPC offers first-class support for streaming on top of it.

A gRPC service supports all streaming combinations and will allow you to use different types of streaming:

- Unary (no streaming)

- Server to client streaming

- Client to server streaming

- Bi-directional streaming

As described in the section above, we were able to implement client-to-server streaming, and the response cycle is in milliseconds. I have never seen that anywhere else. And the good thing about it is that you can have a streaming service with just a few lines of code.
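As a rough illustration of how little code a client-to-server streaming handler needs, the sketch below follows the usual shape of such a handler in Go: receive messages in a loop until io.EOF, then send a single response and close. It does not use the gRPC library; the uploadStream interface only imitates the Recv and SendAndClose methods of a generated stream, and sliceStream, Summary, and receiveUsers are hypothetical names invented for this example.

```go
package main

import (
	"fmt"
	"io"
)

// User and Summary are simplified stand-ins for request and response messages (assumption).
type User struct{ Name string }
type Summary struct{ Count int }

// uploadStream imitates the server side of a client-streaming rpc:
// Recv returns io.EOF once the client has finished sending.
type uploadStream interface {
	Recv() (*User, error)
	SendAndClose(*Summary) error
}

// sliceStream is a hypothetical in-memory stream that replaces the network.
type sliceStream struct {
	in  []User
	pos int
	out *Summary
}

func (s *sliceStream) Recv() (*User, error) {
	if s.pos >= len(s.in) {
		return nil, io.EOF
	}
	u := &s.in[s.pos]
	s.pos++
	return u, nil
}

func (s *sliceStream) SendAndClose(sum *Summary) error {
	s.out = sum
	return nil
}

// receiveUsers consumes every message the client sends and answers
// with a single summary once the stream ends.
func receiveUsers(stream uploadStream) error {
	count := 0
	for {
		_, err := stream.Recv()
		if err == io.EOF {
			return stream.SendAndClose(&Summary{Count: count})
		}
		if err != nil {
			return err
		}
		count++
	}
}

func main() {
	s := &sliceStream{in: []User{{Name: "Tom Muller"}, {Name: "Jane Doe"}}}
	if err := receiveUsers(s); err != nil {
		fmt.Println("stream failed:", err)
		return
	}
	fmt.Println("users received:", s.out.Count)
}
```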

Disadvantages of gRPC

Lack of maturity

One of the things that concerned me the most while using gRPC was the lack of maturity. It seems to me this technology is still too young and still in the first years of development. With minimal developer support outside of Google and not many tools created for HTTP/2 and protocol buffers, the community lacks information about best practices, workarounds, and success stories.

I faced some basic issues while developing this project and I had to research all over the place seeking solutions. The one that wasted most of my time was importing some third-party dependencies, which I had to add manually inside my project. There was no other solution. And the solution was found while reading comments from developers in the community who had faced the same issue, because neither they nor I could find any documentation or anything similar to solve this problem.

Another issue that I had was finding the proper command to compile the grpc-gateway proto file. You may be missing a dependency in your project, have a syntax error, or something similar, but you almost never get to know what exactly is going on because the error messages are missing some important details. And there’s no official documentation that explains in detail what each value in the command does. At least I couldn’t find any.

The last one that I faced was also a basic one. Since gRPC messages carry dates as protobuf timestamps, I had to convert timestamps to Time in Go. And I had to go all over the place again just to find a proper solution, because all the solutions that I found were already deprecated. Then I found out that in the latest update the package you have to use for this conversion had changed from ptypes to timestamppb, and the syntax was no longer the same.

A technology’s maturity can be a big hurdle to its adoption, and that’s apparent with gRPC too. But this is likely to change soon. I prefer to keep the good impressions that I had of gRPC and to remain positive. As with many open-source technologies backed by big corporations, the community will keep growing and will attract more developers.

Limited browser support

This is also a major concern when you’re deciding whether to use this technology. Since gRPC heavily uses HTTP/2 features and no browser provides the level of control over web requests required to support a gRPC client, at the time of writing it’s impossible to call a gRPC service directly from a browser.

No browser today allows a caller to require that HTTP/2 be used or provide access to underlying HTTP/2 frames. To achieve something like this, you need to use a proxy, which has its own limitations. There are currently two implementations:

- gRPC web client by Google - a technology developed by a Google team that provides gRPC support in the browser. It allows browser apps to benefit from the high performance and low network usage of gRPC, but unfortunately not all features are supported. Client and bi-directional streaming aren’t supported, and there is limited support for server streaming.

- gRPC web client by the team at Improbable - a TypeScript gRPC-web client library for browsers that abstracts away the networking (Fetch API or XHR) from users and code-generated classes.

They both share the same logic: A web client sends a normal HTTP request to the proxy, which translates it and forwards it to the gRPC server.

Steeper learning curve

I think we all agree that every new technology that shines and tries to revolutionize the way we build software takes time to learn and adapt to.

Compared to REST or GraphQL, for example, which primarily use JSON, it will take some time to get acquainted with protocol buffers and find tools for dealing with HTTP/2 friction. It doesn’t matter how many years of experience you have or how many applications you have built until now. You will struggle.

And that’s why so many teams will choose to rely on REST for as long as possible: finding a gRPC expert is hard. To be honest, I don’t think there are any, because it’s still a new technology and I have the feeling that everyone who has tried it is still taking the learning steps and not using it in a production environment.

And... that’s all from gRPC JSON Golang!

I hope this documentation helped you understand this technology and that you now have a basic knowledge of gRPC JSON transcoding.

We are all still new to this technology and we are still taking the learning steps. I don’t think there’s anyone out there who can claim to be an expert on gRPC transcoding, because there are lots of things you need to learn.

I would say it’s a good experience and definitely, I would encourage everyone to give it a try.

You can take my project as an initial step: https://github.com/flakrimjusufi/grpc-with-rest

Thank you for coming all the way down!